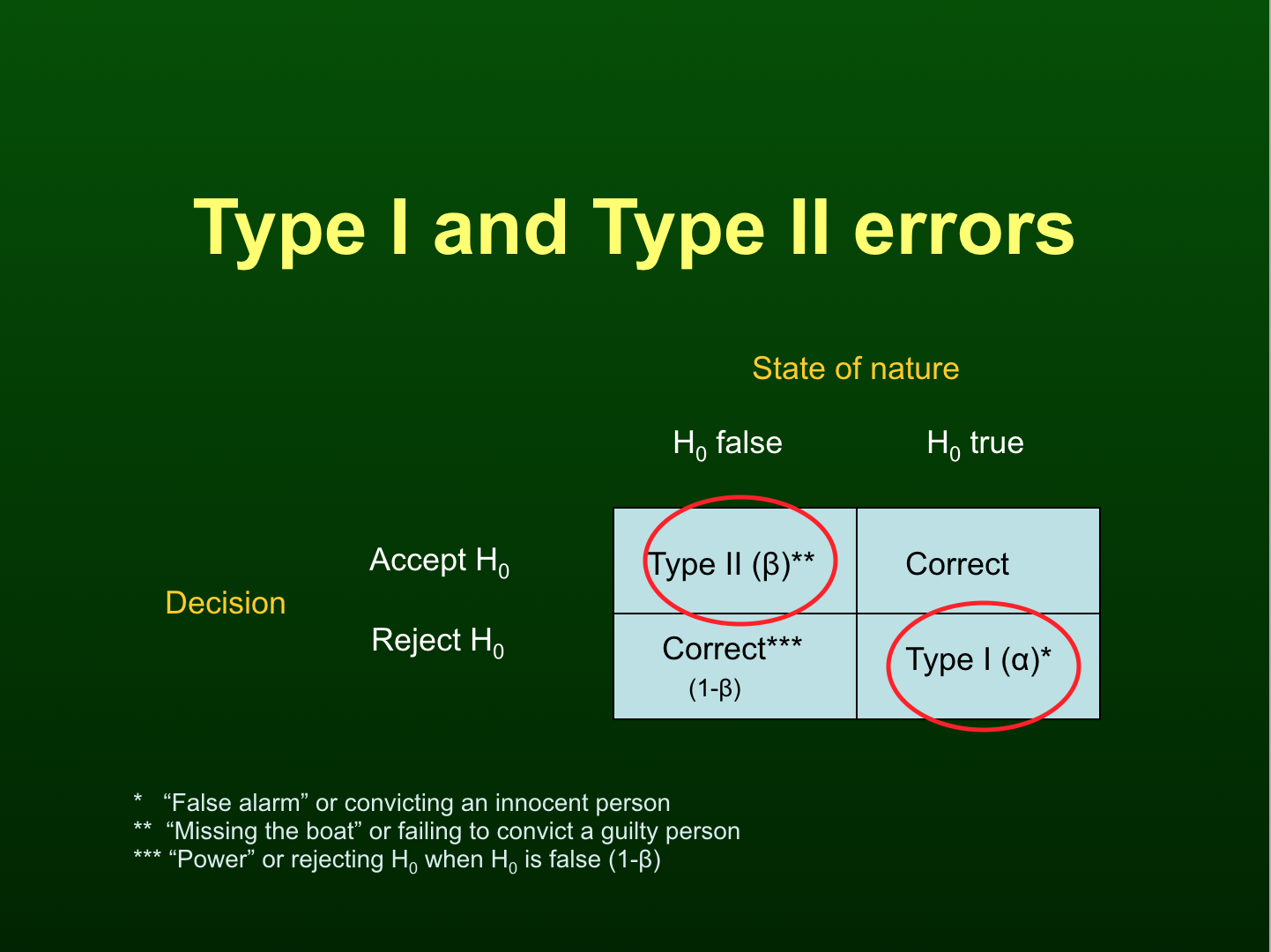

Recall that we test the null hypothesis, H0. If a difference actually does not exist, H0 would be true, and it would be correct to accept it. Conversely, if a difference actually does exist, H0 would be false, and it would be correct to reject it. But, alas, mistakes happen! In statistics, these mistakes have been given special names, and their probabilities have been given Greek letters. A Type I error is rejecting H0 when, in fact, H0 is true (a “false alarm”); its probability is α. A Type II error is accepting H0 when, in fact, H0 is false (“missing the boat”); its probability is β.
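To make the two error types concrete, here is a minimal Monte Carlo sketch. It assumes a one-sample, two-tailed z-test with a known standard deviation. The sample size (20), the “true” shift of 0.5 standard deviations used when H0 is false, the seed, and the helper names are all hypothetical choices for illustration, not values from this column. When H0 is true, the share of rejections estimates α; when H0 is false, the share of non-rejections estimates β.

```python
import random
from statistics import NormalDist, fmean


def z_test_rejects(sample, mu0, sigma, alpha=0.05):
    """Two-tailed one-sample z-test (sigma assumed known).

    Returns True if H0: population mean == mu0 is rejected.
    """
    n = len(sample)
    z = (fmean(sample) - mu0) / (sigma / n ** 0.5)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # 1.96 for alpha = .05
    return abs(z) > z_crit


def rejection_rate(true_mean, mu0=0.0, sigma=1.0, n=20, alpha=0.05,
                   trials=10_000, seed=1):
    """Fraction of simulated studies in which H0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        if z_test_rejects(sample, mu0, sigma, alpha):
            rejections += 1
    return rejections / trials


# H0 true (true mean equals mu0): rejecting is a "false alarm" (Type I error).
type_i = rejection_rate(true_mean=0.0)
# H0 false (true mean is 0.5): accepting is "missing the boat" (Type II error).
type_ii = 1 - rejection_rate(true_mean=0.5)

print(f"Estimated Type I error rate (alpha): {type_i:.3f}")
print(f"Estimated Type II error rate (beta): {type_ii:.3f}")
```

Under these assumptions the first estimate should land near .05, the α we chose, while the second lands near .39, a value we never chose directly: it falls out of the sample size, the size of the true difference, and α.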

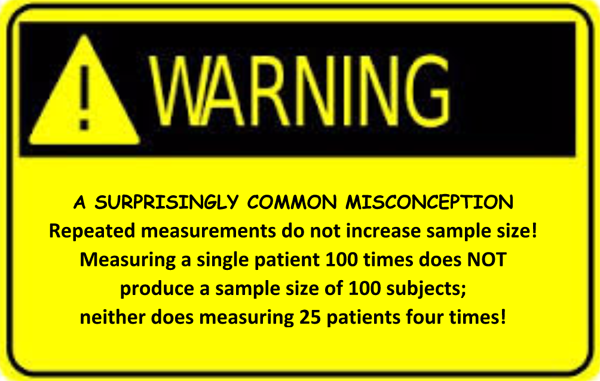

Most of us know α simply as “the .05 level of significance,” yet it is really an indication of an investigator’s tolerance for committing a Type I error. For instance, how willing are you to say that an anesthetic causes bradycardia when it really doesn’t? Investigators get to set the value of α, though besides .05, about the only other α in common use is .01.

The Type II error is much trickier because investigators cannot simply declare a value for β, which is affected by at least six factors. The most important of these is sample size: β is smaller with larger samples. Since 1 − β is power, keeping β as low as possible is a good thing. Other factors that lower β include a larger discrepancy between what is hypothesized and what is true (“effect”), precisely measured variables (less “rubber in the yardstick”), dependent (e.g., matched or paired) samples as opposed to independent (e.g., randomly assigned) samples, specifying a larger α (even though α + β does not equal 1), and 1-tailed as opposed to 2-tailed alternative hypotheses. Next month, statistics in small doses will explore sample size and its importance in statistical decision making.
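The six factors that lower β can be seen side by side in a short calculation. This is a minimal sketch, assuming a z-test comparing two means with a known standard deviation (a simplification; a t-test is more typical in practice). The function name `beta`, the baseline numbers (a true difference of 5, σ = 10, 30 per group), and the correlation ρ = 0.5 between paired measurements are hypothetical. Each line changes one factor from the baseline and reports the resulting β.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def beta(effect, sigma, n, alpha=0.05, tails=2, rho=None):
    """Type II error of a z-test comparing two means (sigma assumed known).

    effect: true difference between the means ("effect")
    sigma:  SD of a single measurement (smaller = less rubber in the yardstick)
    n:      subjects per group (independent) or number of pairs (dependent)
    rho:    None for independent groups, else correlation within pairs
    """
    if rho is None:
        se = sigma * (2 / n) ** 0.5              # independent samples
    else:
        se = sigma * (2 * (1 - rho) / n) ** 0.5  # dependent (paired) samples
    shift = abs(effect) / se  # distance of the truth from H0, in SE units
    if tails == 1:
        # Assumes the 1-tailed alternative points in the true direction.
        power = 1 - Z.cdf(Z.inv_cdf(1 - alpha) - shift)
    else:
        z_crit = Z.inv_cdf(1 - alpha / 2)
        power = (1 - Z.cdf(z_crit - shift)) + Z.cdf(-z_crit - shift)
    return 1 - power  # beta = 1 - power


baseline = {"effect": 5, "sigma": 10, "n": 30}
changes = {
    "baseline": {},
    "larger n (60)": {"n": 60},
    "larger effect (8)": {"effect": 8},
    "smaller sigma (6)": {"sigma": 6},
    "paired, rho = 0.5": {"rho": 0.5},
    "alpha = .10": {"alpha": 0.10},
    "1-tailed": {"tails": 1},
}
for label, change in changes.items():
    print(f"{label:<20} beta = {beta(**{**baseline, **change}):.3f}")
```

With these numbers the baseline β is roughly .51, so α + β is about .56 rather than 1, and every single-factor change listed in the paragraph above pulls β below that baseline.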